Local Gödel Environment Setup with KIND


This guide will walk you through how to set up the Gödel Unified Scheduling system.


One-Step Cluster Bootstrap & Installation


We provide a quick way to try Gödel on your local machine: a single command sets up a kind cluster locally and deploys the necessary CRDs, ClusterRoles, and RoleBindings.

Prerequisites


Please make sure the following dependencies are installed.


- kubectl >= v1.19

- docker >= 19.03

- kind >= v0.17.0

- go >= v1.21.4

- kustomize >= v4.5.7
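The minimum versions above can be checked mechanically. The following is a minimal sketch, not part of the Gödel repository: `version_ge` is a hypothetical helper name, and it assumes a `sort` that supports `-V` (version sort), as GNU coreutils does. How you obtain each installed version string is left out, since the output format differs per tool.

```shell
#!/usr/bin/env bash
# version_ge HAVE WANT: succeed when version HAVE >= version WANT.
# A leading "v" is ignored on both sides. (Hypothetical helper.)
version_ge() {
  local have="${1#v}" want="${2#v}"
  # sort -V orders version strings; if WANT sorts first (or ties),
  # then HAVE is at least WANT.
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n1)" = "$want" ]
}

# Example with literal version strings
if version_ge "v0.20.0" "v0.17.0"; then
  echo "kind version is new enough"
fi
```

Comparing with version sort rather than plain string comparison matters for cases such as 1.9 versus 1.19.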

1. Clone the Gödel repo to your machine


$ git clone https://github.com/kubewharf/godel-scheduler

2. Change to the Gödel directory

$ cd godel-scheduler

3. Bootstrap the cluster and install Gödel components


$ make local-up

This command completes the following steps:

- Builds the Gödel image locally;

- Starts a Kubernetes cluster using kind;

- Installs the Gödel control-plane components on the cluster.

Manual Installation


If you have an existing Kubernetes cluster, please follow the steps below to install Gödel.


1. Build the Gödel image

make docker-images

2. Load the Gödel image into your cluster

For example, if you are using kind:

kind load docker-image godel-local:latest --name <cluster-name> --nodes <control-plane-nodes>

3. Create the Gödel components in the cluster

kustomize build manifests/base/ | kubectl apply -f -

#!/bin/bash
# Exit immediately if any command fails
set -e

DOCKERFILE_DIR="docker/"
LOCAL_DOCKERFILE="godel-local.Dockerfile"

echo "Building docker image(s)..."
# Best-effort pruning of stopped containers and dangling images
docker container prune -f || true
docker image prune -f || true

# Remove every local image whose `docker images` row matches the given name
cleanup_godel_images() {
  for i in $(docker images | grep "${1}" | awk '{print $3}'); do
    docker rmi "$i" -f
  done
}

# Build one Dockerfile and tag the result as <name>:<rev> and <name>:latest
build_image() {
  local file=${1}
  REPO=$(basename "$file")
  REPO=${REPO%.*}
  # Check if we are in a Git repository
  if [ -d ".git" ]; then
    REV=$(git log --pretty=format:'%h' -n 1)
    TAG="${REPO}:${REV}"
  else
    # Use a fixed tag if not in a Git repository
    REV="non-git-commit"
    TAG="${REPO}:${REV}"
    echo "Warning: Building image with non-Git tag '${TAG}' because this is not a Git repository."
  fi
  cleanup_godel_images "${REPO}"
  docker build -t "${TAG}" -f "$file" ./
  docker tag "${TAG}" "${REPO}:latest"
}

# Build every *.Dockerfile under DOCKERFILE_DIR. The pattern is quoted so
# the shell does not expand it before find sees it.
for file in $(find "$DOCKERFILE_DIR" -name '*.Dockerfile'); do
  build_image "${file}"
done
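The image name used by `kind load docker-image godel-local:latest` follows from how the script above derives the repository name from each Dockerfile path. A small sketch of just that derivation, where `image_repo` is a hypothetical helper name and the path is assembled from the `DOCKERFILE_DIR` and `LOCAL_DOCKERFILE` values in the script:

```shell
#!/usr/bin/env bash
# image_repo PATH: print the image repository name the build script would
# use for a Dockerfile path (file name with its last extension removed).
image_repo() {
  local repo
  repo=$(basename "$1")   # docker/godel-local.Dockerfile -> godel-local.Dockerfile
  echo "${repo%.*}"       # strip the shortest trailing ".something"
}

image_repo "docker/godel-local.Dockerfile"   # prints: godel-local
```

The script then tags the build twice, once with the short Git revision and once with `latest`, which is why `godel-local:latest` is available to load.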

Quickstart - Job Level Affinity


This Quickstart guide provides a step-by-step tutorial on how to effectively use the job-level affinity feature for podgroups, with a focus on both preferred and required affinity types. For comprehensive information about this feature, please consult the Job Level Affinity Design Document.

<TODO Add the design doc when it’s ready>


Local Cluster Bootstrap & Installation


To try out this feature, we need to set up a few labels on the Kubernetes cluster nodes. We provide a make command that bootstraps such a cluster locally using KIND.

make local-up-labels

Affinity-Related Configurations

Node

In our sample YAML for KIND, we define ‘mainnet’ and ‘micronet’ as custom node labels. These labels simulate the network configuration of nodes in a real-world production environment.

- role: worker
  image: kindest/node:v1.21.1
  labels:
    micronet: 10.76.65.0
    mainnet: 10.76.0.0

PodGroup

In our sample YAML for the podgroup, we specify ‘podGroupAffinity’. This configuration requires that pods belonging to this podgroup be scheduled on nodes within the same ‘mainnet’. Additionally, it expresses a preference for scheduling them on nodes that share the same ‘micronet’.

apiVersion: scheduling.godel.kubewharf.io/v1alpha1
kind: PodGroup
metadata:
  generation: 1
  name: nginx
spec:
  affinity:
    podGroupAffinity:
      preferred:
        - topologyKey: micronet
      required:
        - topologyKey: mainnet
  minMember: 10
  scheduleTimeoutSeconds: 3000

Deployment

Specify the podgroup name in the labels and annotations of the pod template.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 10
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      name: nginx
      labels:
        name: nginx
        godel.bytedance.com/pod-group-name: "nginx"
      annotations:
        godel.bytedance.com/pod-group-name: "nginx"
    spec:
      schedulerName: godel-scheduler
      containers:
        - name: nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1
            requests:
              cpu: 1

Use Job Level Affinity in Scheduling


First, let’s check out the labels for nodes in the cluster.


$ kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

godel-demo-labels-control-plane Ready control-plane,master 37m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-control-plane,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

godel-demo-labels-worker Ready <none> 36m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-worker,kubernetes.io/os=linux,mainnet=10.76.0.0,micronet=10.76.64.0,subCluster=subCluster-a

godel-demo-labels-worker2 Ready <none> 36m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-worker2,kubernetes.io/os=linux,mainnet=10.25.0.0,micronet=10.25.162.0,subCluster=subCluster-b

godel-demo-labels-worker3 Ready <none> 36m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-worker3,kubernetes.io/os=linux,mainnet=10.76.0.0,micronet=10.76.65.0,subCluster=subCluster-a

godel-demo-labels-worker4 Ready <none> 36m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-worker4,kubernetes.io/os=linux,mainnet=10.76.0.0,micronet=10.76.64.0,subCluster=subCluster-a

godel-demo-labels-worker5 Ready <none> 36m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-worker5,kubernetes.io/os=linux,mainnet=10.53.0.0,micronet=10.53.16.0,subCluster=subCluster-a

godel-demo-labels-worker6 Ready <none> 36m v1.21.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=godel-demo-labels-worker6,kubernetes.io/os=linux,mainnet=10.57.0.0,micronet=10.57.111.0,subCluster=subCluster-b

- godel-demo-labels-worker and godel-demo-labels-worker4 share the same ‘micronet’;

- godel-demo-labels-worker, godel-demo-labels-worker3, and godel-demo-labels-worker4 share the same ‘mainnet’.
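Reading these groupings out of the long label column by eye is error-prone. The following is a minimal sketch of a filter that lists the nodes carrying a given label value; `nodes_with_label` is a hypothetical helper name, and the sketch assumes input in the layout of `kubectl get nodes --show-labels --no-headers`, where the node name is the first column and the comma-separated labels are the last.

```shell
#!/usr/bin/env bash
# nodes_with_label KEY VALUE: read node rows on stdin and print the names
# of nodes whose label list contains exactly KEY=VALUE. (Hypothetical helper.)
nodes_with_label() {
  awk -v want="$1=$2" '{
    n = split($NF, labels, ",")          # last column holds the labels
    for (i = 1; i <= n; i++)
      if (labels[i] == want) { print $1; break }
  }'
}

# Intended use against a live cluster (not executed here):
#   kubectl get nodes --show-labels --no-headers | nodes_with_label mainnet 10.76.0.0
```

For the cluster shown above, filtering on mainnet 10.76.0.0 should list the three workers named in the second bullet.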

Second, create the podgroup and deployment.


$ kubectl apply -f manifests/quickstart-feature-examples/job-level-affinity/podGroup.yaml

podgroup.scheduling.godel.kubewharf.io/nginx created

$ kubectl apply -f manifests/quickstart-feature-examples/job-level-affinity/deployment.yaml

deployment.apps/nginx created

Third, check the scheduling result.


$ kubectl get pods -l name=nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-68fc9649cc-5pdb7 1/1 Running 0 8s 10.244.2.21 godel-demo-labels-worker <none> <none>

nginx-68fc9649cc-b26tk 1/1 Running 0 8s 10.244.2.19 godel-demo-labels-worker <none> <none>

nginx-68fc9649cc-bvvx6 1/1 Running 0 8s 10.244.2.18 godel-demo-labels-worker <none> <none>

nginx-68fc9649cc-dtxqn 1/1 Running 0 8s 10.244.2.23 godel-demo-labels-worker <none> <none>

nginx-68fc9649cc-hh5pr 1/1 Running 0 8s 10.244.3.34 godel-demo-labels-worker4 <none> <none>

nginx-68fc9649cc-jt8q9 1/1 Running 0 8s 10.244.3.35 godel-demo-labels-worker4 <none> <none>

nginx-68fc9649cc-l8j2s 1/1 Running 0 8s 10.244.2.20 godel-demo-labels-worker <none> <none>

nginx-68fc9649cc-t9fb8 1/1 Running 0 8s 10.244.3.33 godel-demo-labels-worker4 <none> <none>

nginx-68fc9649cc-vcjm7 1/1 Running 0 8s 10.244.2.17 godel-demo-labels-worker <none> <none>

nginx-68fc9649cc-wplt7 1/1 Running 0 8s 10.244.2.22 godel-demo-labels-worker <none> <none>

The pods have been scheduled to godel-demo-labels-worker and godel-demo-labels-worker4, which share the same ‘micronet’. The preferred affinity could be honored here because these two nodes have enough resources for all 10 pods.

Next, let’s try scheduling a podgroup with minMember equal to 15, while the rest of the configuration remains the same.

- In manifests/quickstart-feature-examples/job-level-affinity/podGroup-2.yaml, notice that minMember is 15.

minMember: 15

- In manifests/quickstart-feature-examples/job-level-affinity/deployment-2.yaml, notice that replicas is 15.

spec:
  replicas: 15

Apply the two YAML files and check the scheduling result.

# Clean up the env first

$ kubectl delete -f manifests/quickstart-feature-examples/job-level-affinity/deployment.yaml && kubectl delete -f manifests/quickstart-feature-examples/job-level-affinity/podGroup.yaml

deployment.apps "nginx" deleted

podgroup.scheduling.godel.kubewharf.io "nginx" deleted

$ kubectl apply -f manifests/quickstart-feature-examples/job-level-affinity/podGroup-2.yaml

podgroup.scheduling.godel.kubewharf.io/nginx-2 created

$ kubectl apply -f manifests/quickstart-feature-examples/job-level-affinity/deployment-2.yaml

deployment.apps/nginx-2 created

$ kubectl get pods -l name=nginx-2 -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-2-68fc9649cc-2l2v7 1/1 Running 0 6s 10.244.6.11 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-6mz78 1/1 Running 0 6s 10.244.2.13 godel-demo-labels-worker <none> <none>

nginx-2-68fc9649cc-6nm92 1/1 Running 0 6s 10.244.6.12 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-6qmmx 1/1 Running 0 6s 10.244.2.14 godel-demo-labels-worker <none> <none>

nginx-2-68fc9649cc-cfd75 1/1 Running 0 6s 10.244.2.11 godel-demo-labels-worker <none> <none>

nginx-2-68fc9649cc-fg87r 1/1 Running 0 6s 10.244.3.28 godel-demo-labels-worker4 <none> <none>

nginx-2-68fc9649cc-gss27 1/1 Running 0 6s 10.244.3.26 godel-demo-labels-worker4 <none> <none>

nginx-2-68fc9649cc-hbpwt 1/1 Running 0 6s 10.244.6.15 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-jkdqx 1/1 Running 0 6s 10.244.3.27 godel-demo-labels-worker4 <none> <none>

nginx-2-68fc9649cc-n498k 1/1 Running 0 6s 10.244.6.9 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-q5h5r 1/1 Running 0 6s 10.244.2.12 godel-demo-labels-worker <none> <none>

nginx-2-68fc9649cc-qjsgk 1/1 Running 0 6s 10.244.6.14 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-vdp2v 1/1 Running 0 6s 10.244.6.13 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-vpzlj 1/1 Running 0 6s 10.244.6.10 godel-demo-labels-worker3 <none> <none>

nginx-2-68fc9649cc-z2ffg 1/1 Running 0 6s 10.244.3.29 godel-demo-labels-worker4 <none> <none>

The pods have been scheduled to godel-demo-labels-worker, godel-demo-labels-worker3, and godel-demo-labels-worker4. godel-demo-labels-worker3 was used here because the resources on worker and worker4 were not sufficient for all 15 pods, and worker3 satisfies the required affinity because it is in the same ‘mainnet’.
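To see the spread at a glance, the pod listing can be tallied per node. This is a rough sketch, assuming input in the layout of `kubectl get pods -o wide --no-headers` as printed above, where NODE is the seventh whitespace-separated column; `pods_per_node` is a hypothetical helper name. Newer kubectl versions may print RESTARTS with an extra parenthesised field, which would shift the column.

```shell
#!/usr/bin/env bash
# pods_per_node: read pod rows on stdin and print "<node> <count>" lines,
# sorted by node name. (Hypothetical helper; column 7 is assumed to be NODE.)
pods_per_node() {
  awk '{ count[$7]++ } END { for (node in count) print node, count[node] }' \
    | LC_ALL=C sort
}

# Intended use against a live cluster (not executed here):
#   kubectl get pods -l name=nginx-2 -o wide --no-headers | pods_per_node
```

Applied to the listing above, the tally is 4 pods on godel-demo-labels-worker, 7 on godel-demo-labels-worker3, and 4 on godel-demo-labels-worker4.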

Clean up the environment.


$ kubectl delete -f manifests/quickstart-feature-examples/job-level-affinity/podGroup-2.yaml && kubectl delete -f manifests/quickstart-feature-examples/job-level-affinity/deployment-2.yaml

podgroup.scheduling.godel.kubewharf.io "nginx-2" deleted

deployment.apps "nginx-2" deleted

Quickstart - SubCluster Concurrent Scheduling


This Quickstart guide demonstrates how to implement concurrent scheduling at the SubCluster level. Each SubCluster, defined by node labels, will possess its own distinct scheduling workflow. These workflows will execute simultaneously, ensuring efficient task management across the system.


Local Cluster Bootstrap & Installation


To try out this feature, we need to set up a few labels on the Kubernetes cluster nodes. We provide a make command that bootstraps such a cluster locally using KIND.

make local-up-labels

Related Configurations


Node

In our sample YAML for KIND, we define ‘subCluster’ as a custom node label. This label simulates real-world production environments, where nodes can be classified by business scenario.

- role: worker
  image: kindest/node:v1.21.1
  labels:
    subCluster: subCluster-a
- role: worker
  image: kindest/node:v1.21.1
  labels:
    subCluster: subCluster-b

Godel Scheduler Configuration

We define ‘subCluster’ as the subClusterKey in the Gödel scheduler configuration. This corresponds to the node label key above.

apiVersion: godelscheduler.config.kubewharf.io/v1beta1

kind: GodelSchedulerConfiguration

subClusterKey: subCluster

Deployment

In the sample deployment file, one deployment specifies ‘subCluster: subCluster-a’ in its nodeSelector, while the other deployment specifies ‘subCluster: subCluster-b’.


apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-a
spec:
  ...
    spec:
      schedulerName: godel-scheduler
      nodeSelector:
        subCluster: subCluster-a
      ...
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-b
spec:
  ...
    spec:
      schedulerName: godel-scheduler
      nodeSelector:
        subCluster: subCluster-b
      ...

Concurrent Scheduling Quick Demo


Apply the deployment YAML files.


$ kubectl apply -f manifests/quickstart-feature-examples/concurrent-scheduling/deployment.yaml

deployment.apps/nginx-a created

deployment.apps/nginx-b created

Check the scheduler logs.


$ kubectl get pods -n godel-system

NAME READY STATUS RESTARTS AGE

binder-858c974d4c-bhbtv 1/1 Running 0 123m

dispatcher-5b8f5cf5c6-fcm8d 1/1 Running 0 123m

scheduler-85f4556799-6ltv5 1/1 Running 0 123m

$ kubectl logs scheduler-85f4556799-6ltv5 -n godel-system

...

I0108 22:38:36.183175 1 util.go:90] "Ready to try and schedule the next unit" numberOfPods=1 unitKey="SinglePodUnit/default/nginx-b-69ccdbff54-fvpg6"

I0108 22:38:36.183190 1 unit_scheduler.go:280] "Attempting to schedule unit" switchType="GTSchedule" subCluster="subCluster-b" unitKey="SinglePodUnit/default/nginx-b-69ccdbff54-fvpg6"

I0108 22:38:36.183229 1 util.go:90] "Ready to try and schedule the next unit" numberOfPods=1 unitKey="SinglePodUnit/default/nginx-a-649b85664f-2tjvp"

I0108 22:38:36.183246 1 unit_scheduler.go:280] "Attempting to schedule unit" switchType="GTSchedule" subCluster="subCluster-a" unitKey="SinglePodUnit/default/nginx-a-649b85664f-2tjvp"

I0108 22:38:36.183390 1 unit_scheduler.go:327] "Attempting to schedule unit in this node group" switchType="GTSchedule" subCluster="subCluster-b" unitKey="SinglePodUnit/default/nginx-b-69ccdbff54-fvpg6" nodeGroup="[]"

...

From the log snippet above, it’s clear that the scheduling attempts for the two pods, nginx-a (subCluster: subCluster-a) and nginx-b (subCluster: subCluster-b), overlap in time: the attempt for subCluster-a starts before the attempt for subCluster-b has finished.
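The interleaving is easier to see once each scheduling attempt is reduced to its timestamp and subCluster. Below is a minimal sketch that assumes the log line layout shown in the snippet above (severity and date, time, then key="value" pairs); `schedule_attempts` is a hypothetical helper name, not a Gödel tool.

```shell
#!/usr/bin/env bash
# schedule_attempts: read scheduler log lines on stdin and print
# "<time> <subCluster>" for every "Attempting to schedule unit" entry.
# (Hypothetical helper; relies on the log layout shown in this guide.)
schedule_attempts() {
  # -F matches the quoted message literally, so the longer
  # "... in this node group" message is excluded.
  grep -F '"Attempting to schedule unit"' \
    | sed -E 's/^[A-Z][0-9]+ ([0-9:.]+) .*subCluster="([^"]*)".*$/\1 \2/'
}

# Intended use against a live cluster (not executed here):
#   kubectl logs <scheduler-pod> -n godel-system | schedule_attempts
```

For the snippet above this yields one line for subCluster-b at 22:38:36.183190 and one for subCluster-a at 22:38:36.183246, with the node-group step for subCluster-b logged only afterwards.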

Clean up the environment


$ kubectl delete -f manifests/quickstart-feature-examples/concurrent-scheduling/deployment.yaml

deployment.apps "nginx-a" deleted

deployment.apps "nginx-b" deleted

Quickstart - Gang Scheduling


Introduction


In this quickstart guide, we’ll explore Gang Scheduling, a feature that ensures an “all or nothing” approach to scheduling pods.

Gödel scheduler treats all pods under a job (PodGroup) as a unified entity during scheduling attempts.

This approach eliminates scenarios where a job has “partially reserved resources”, effectively mitigating resource deadlocks between multiple jobs and making it a valuable tool for managing complex scheduling scenarios in your cluster.

This guide will walk you through setting up and using Gang Scheduling.


Local Cluster Bootstrap & Installation


If you do not have a local Kubernetes cluster installed with Godel yet, please refer to the Cluster Setup Guide.


Related Configurations


Below are the YAML contents and descriptions for the related configuration used in this guide:


Pod Group Configuration


The Pod Group configuration specifies the minimum number of members (minMember) required and the scheduling timeout in seconds (scheduleTimeoutSeconds).


apiVersion: scheduling.godel.kubewharf.io/v1alpha1
kind: PodGroup
metadata:
  generation: 1
  name: test-podgroup
spec:
  minMember: 2
  scheduleTimeoutSeconds: 300

Pod Configuration


This YAML configuration defines the first child pod (pod-1) within the Pod Group. It includes labels and annotations required for Gang Scheduling.


apiVersion: v1
kind: Pod
metadata:
  name: pod-1
  labels:
    name: nginx
    # Pods must have this label set
    godel.bytedance.com/pod-group-name: "test-podgroup"
  annotations:
    # Pods must have this annotation set
    godel.bytedance.com/pod-group-name: "test-podgroup"
spec:
  schedulerName: godel-scheduler
  containers:
    - name: test
      image: nginx
      imagePullPolicy: IfNotPresent

The second child pod differs only in name.

apiVersion: v1
kind: Pod
metadata:
  name: pod-2
  labels:
    name: nginx
    # Pods must have this label set
    godel.bytedance.com/pod-group-name: "test-podgroup"
  annotations:
    # Pods must have this annotation set
    godel.bytedance.com/pod-group-name: "test-podgroup"
spec:
  schedulerName: godel-scheduler
  containers:
    - name: test
      image: nginx
      imagePullPolicy: IfNotPresent

Using Gang Scheduling


- Create a Pod Group:


To start using Gang Scheduling, create a Pod Group using the following command:


$ kubectl apply -f manifests/quickstart-feature-examples/gang-scheduling/podgroup.yaml

podgroup.scheduling.godel.kubewharf.io/test-podgroup created

$ kubectl get podgroups

NAME AGE

test-podgroup 11s

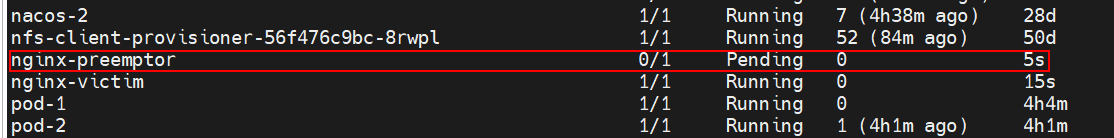

- Create Child Pod 1 of the Pod Group:


Now, let’s create the first child pod within the Pod Group.

Keep in mind that due to Gang Scheduling, this pod will initially be in a “Pending” state until the minMember requirement of the Pod Group is satisfied.

Gang Scheduling ensures that pods are not scheduled until the specified number of pods (in this case, 2) is ready to be scheduled together.


Use the following command to create the first child pod:


$ kubectl apply -f manifests/quickstart-feature-examples/gang-scheduling/pod-1.yaml

pod/pod-1 created

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

pod-1 0/1 Pending 0 18s

- Create Child Pod 2 of the Pod Group:


Now that we have created the first child pod and it’s in a “Pending” state, let’s proceed to create the second child pod.

Both pods will become “Running” simultaneously once the minMember requirement of the Pod Group is fulfilled.


Similarly, create the second child pod within the same Pod Group:


$ kubectl apply -f manifests/quickstart-feature-examples/gang-scheduling/pod-2.yaml

pod/pod-2 created

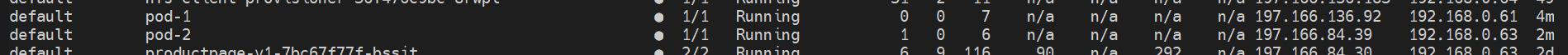

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

pod-1 1/1 Running 0 24s

pod-2 1/1 Running 0 2s
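The “all or nothing” behaviour seen in the two listings can be expressed as a simple check over the STATUS column. This is only an illustrative sketch: `gang_state` is a hypothetical helper name, it assumes rows in the layout of `kubectl get pods --no-headers` with STATUS as the third column, and it assumes the group contains exactly minMember pods. Other transient statuses (for example while containers are starting) are reported as mixed.

```shell
#!/usr/bin/env bash
# gang_state: read pod rows on stdin and classify the group as
# all-pending, all-running, mixed, or empty. (Hypothetical helper.)
gang_state() {
  awk '
    $3 == "Running" { running++ }
    $3 == "Pending" { pending++ }
    END {
      if (NR == 0)            print "empty"
      else if (running == NR) print "all-running"
      else if (pending == NR) print "all-pending"
      else                    print "mixed"
    }'
}

# Intended use against a live cluster (not executed here):
#   kubectl get pods -l name=nginx --no-headers | gang_state
```

In this walkthrough the expected sequence is all-pending after creating pod-1 and all-running after creating pod-2.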

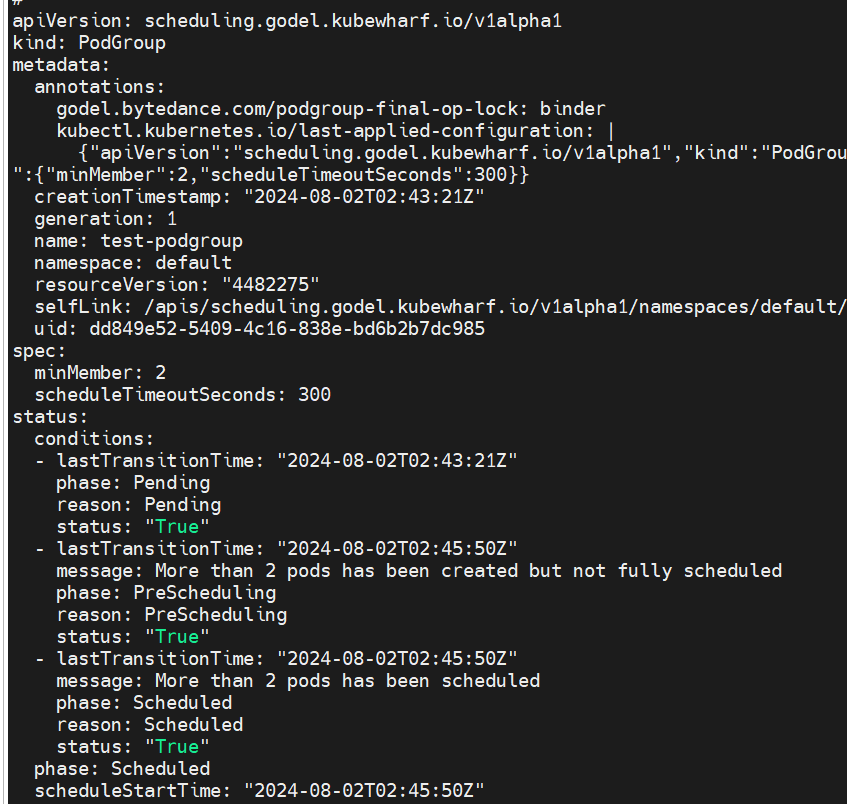

- View Pod Group Status:


You can check the status of the Pod Group using the following command:


$ kubectl get podgroup test-podgroup -o yaml

apiVersion: scheduling.godel.kubewharf.io/v1alpha1
kind: PodGroup
metadata:
  annotations:
    godel.bytedance.com/podgroup-final-op-lock: binder
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"scheduling.godel.kubewharf.io/v1alpha1","kind":"PodGroup","metadata":{"annotations":{},"generation":1,"name":"test-podgroup","namespace":"default"},"spec":{"minMember":2,"scheduleTimeoutSeconds":300}}
  creationTimestamp: "2024-01-11T00:28:17Z"
  generation: 1
  name: test-podgroup
  namespace: default
  resourceVersion: "722"
  selfLink: /apis/scheduling.godel.kubewharf.io/v1alpha1/namespaces/default/podgroups/test-podgroup
  uid: fe2931c4-fa6b-4770-8ef2-589966fce3f7
spec:
  minMember: 2
  scheduleTimeoutSeconds: 300
status:
  conditions:
  - lastTransitionTime: "2024-01-11T00:28:22Z"
    phase: Pending
    reason: Pending
    status: "True"
  - lastTransitionTime: "2024-01-11T00:28:36Z"
    message: More than 2 pods has been created but not fully scheduled
    phase: PreScheduling
    reason: PreScheduling
    status: "True"
  - lastTransitionTime: "2024-01-11T00:28:36Z"
    message: More than 2 pods has been scheduled
    phase: Scheduled
    reason: Scheduled
    status: "True"
  phase: Scheduled
  scheduleStartTime: "2024-01-11T00:28:37Z"

Quickstart - Preemption


Within the Gödel Scheduling System, Pod preemption emerges as a key feature designed to uphold optimal resource utilization.

If a Pod cannot be scheduled, the Gödel scheduler tries to preempt (evict) lower priority Pods to make scheduling of the pending Pod possible.

This is a Quickstart guide that will walk you through how preemption works in the Gödel Scheduling System.


Local Cluster Bootstrap & Installation


If you do not have a local Kubernetes cluster installed with Godel yet, please refer to the Cluster Setup Guide for creating a local KIND cluster installed with Gödel.


How Preemption Works


When no resources are left for scheduling a pending Pod, preemption comes into the picture.

The Gödel scheduler tries to preempt (evict) lower-priority Pods to make scheduling of the pending Pod possible, while respecting a few protection strategies.

Quickstart Scenario


To better illustrate the preemption features, let’s assume there is one node with less than 8 CPU cores available for scheduling.


Note: The Capacity and Allocatable values of the worker node depend on your own Docker resource configuration, so they are not guaranteed to match this guide exactly. To try out this feature locally, tune the resource requests in the example YAML files to fit your own setup. For example, the author allotted 8 CPUs to Docker, so the worker node in this guide has 8 CPUs.

$ kubectl describe node godel-demo-default-worker

Name: godel-demo-default-worker

...

Capacity:

cpu: 8

...

Allocatable:

cpu: 8

...

...

Non-terminated Pods: (2 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system kindnet-cnvtd 100m (2%) 100m (2%) 50Mi (0%) 50Mi (0%) 3d23h

kube-system kube-proxy-6fh4g 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d23h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 100m (2%) 100m (2%)

...

Events: <none>

Basic Preemption

Gödel Scheduling System provides basic preemption features comparable to those of the Kubernetes scheduler.

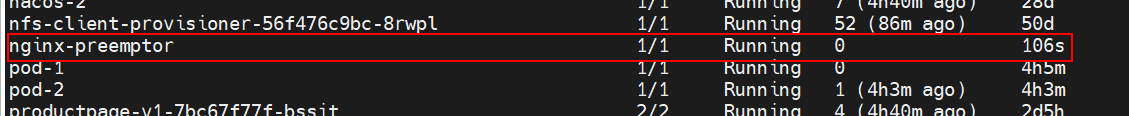

Priority-based Preemption


- Create a pod with a lower priority, which requests 6 CPU cores.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: low-priority
  annotations:
    "godel.bytedance.com/can-be-preempted": "true"
value: 80
description: "a priority class with low priority that can be preempted"
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-victim
  annotations:
    "godel.bytedance.com/can-be-preempted": "true"
spec:
  schedulerName: godel-scheduler
  priorityClassName: low-priority
  containers:
    - name: nginx-victim
      image: nginx
      imagePullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 6
        requests:
          cpu: 6

$ kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-victim 1/1 Running 0 2s

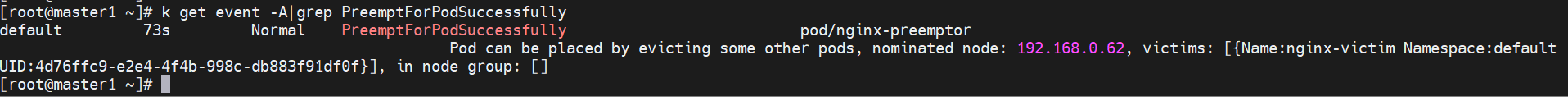

- Create a pod with a higher priority, which requests 3 CPU cores.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 100
description: "a priority class with a high priority"
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-preemptor
spec:
  schedulerName: godel-scheduler
  priorityClassName: high-priority
  containers:
    - name: nginx-preemptor
      image: nginx
      imagePullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 3
        requests:
          cpu: 3

Remember, only 8 CPU cores are available, so preemption is triggered when the high-priority Pod gets scheduled.

$ kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-preemptor 1/1 Running 0 18s

$ kubectl get event
...
0s Normal PreemptForPodSuccessfully pod/nginx-preemptor Pod can be placed by evicting some other pods, nominated node: godel-demo-default-worker, victims: [{Name:nginx-victim Namespace:default UID:b685ef99-20b8-43bb-9576-10d2ca09e2d6}], in node group: []
...

As we can see, the pod with a lower priority was preempted to accommodate the pod with a higher priority.
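The arithmetic behind this outcome can be written down directly. The sketch below is a deliberate simplification that looks at CPU requests only, in millicores, using the numbers from this scenario (8 CPUs allocatable, 100m requested by kindnet, 6 CPUs by nginx-victim, 3 CPUs by nginx-preemptor). `fits` is a hypothetical helper name, and the real scheduler considers far more than this single resource.

```shell
#!/usr/bin/env bash
# fits ALLOCATABLE USED REQUEST: succeed when REQUEST fits into what is
# left of ALLOCATABLE after USED; all values in millicores.
# (Hypothetical helper; CPU-only simplification.)
fits() {
  local allocatable=$1 used=$2 request=$3
  [ $(( used + request )) -le "$allocatable" ]
}

allocatable=8000   # node Allocatable: 8 CPU
system=100         # kindnet requests 100m
victim=6000        # nginx-victim requests 6 CPU
preemptor=3000     # nginx-preemptor requests 3 CPU

if fits "$allocatable" "$(( system + victim ))" "$preemptor"; then
  echo "fits without preemption"
else
  echo "does not fit: preemption needed"
fi
```

With the victim running, 6100m + 3000m exceeds 8000m, so the high-priority pod cannot be placed; once the victim is evicted, 100m + 3000m fits comfortably.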

- Clean up the environment

kubectl delete pod nginx-preemptor

Protection with PodDisruptionBudget


- Create a 3-replica deployment with a lower priority, which requests 6 CPU cores in total. Meanwhile, a PodDisruptionBudget object with minAvailable set to 3 is also created.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: low-priority
  annotations:
    "godel.bytedance.com/can-be-preempted": "true"
value: 80
description: "a priority class with low priority that can be preempted"
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: nginx-pdb
spec:
  minAvailable: 3
  selector:
    matchLabels:
      name: nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-victim
spec:
  replicas: 3
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      name: nginx-victim
      labels:
        name: nginx
      annotations:
        "godel.bytedance.com/can-be-preempted": "true"
    spec:
      schedulerName: godel-scheduler
      priorityClassName: low-priority
      containers:
        - name: test
          image: nginx
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 2
            requests:
              cpu: 2

$ kubectl get pod,deploy
NAME READY STATUS RESTARTS AGE
pod/nginx-victim-588f6db4bd-r99bq 1/1 Running 0 6s
pod/nginx-victim-588f6db4bd-vv24v 1/1 Running 0 6s
pod/nginx-victim-588f6db4bd-xdsht 1/1 Running 0 6s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/nginx-victim 3/3 3 3 6s

Create a pod with a higher priority, which requests 3 CPU core.

-

创建一个优先级更高的pod,它需要3个CPU核心。

--- apiVersion: scheduling.k8s.io/v1 kind: PriorityClass metadata: name: high-priority value: 100 description: "a priority class with a high priority" --- apiVersion: v1 kind: Pod metadata: name: nginx-preemptor spec: schedulerName: godel-scheduler priorityClassName: high-priority containers: - name: nginx-preemptor image: nginx imagePullPolicy: IfNotPresent resources: limits: cpu: 3 requests: cpu: 3In this case, preemption will not be triggered for scheduling the high-priority Pod above, due to the protection provided by the PodDisruptionBudget object.

在这种情况下,由于PodDisruptionBudget对象提供的保护,在调度上述高优先级Pod时不会触发抢占。

$ kubectl get pod,deploy
NAME                                READY   STATUS    RESTARTS   AGE
pod/nginx-preemptor                 0/1     Pending   0          34s
pod/nginx-victim-588f6db4bd-r99bq   1/1     Running   0          109s
pod/nginx-victim-588f6db4bd-vv24v   1/1     Running   0          109s
pod/nginx-victim-588f6db4bd-xdsht   1/1     Running   0          109s

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-victim   3/3     3            3           109s

However, if we update the PodDisruptionBudget object by setting the minAvailable field to 2, preemption will be triggered for the high-priority Pod in the next scheduling cycle.
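The guide does not show the update command itself. One possible way, sketched here under the assumption that the budget is still named nginx-pdb as in the manifest above, is a JSON merge patch through `kubectl patch`. The helper names `pdb_patch` and `set_min_available` are hypothetical and exist only for this example.

```shell
# Hypothetical helper: build the JSON merge-patch body that sets spec.minAvailable.
pdb_patch() {
  printf '{"spec":{"minAvailable":%d}}' "$1"
}

# Hypothetical helper: patch a PodDisruptionBudget.
# $1 = new minAvailable, $2 = PDB name (defaults to nginx-pdb from this guide).
set_min_available() {
  kubectl patch pdb "${2:-nginx-pdb}" --type merge -p "$(pdb_patch "$1")"
}
```

Calling `set_min_available 2` applies the change used in this step. Editing the manifest and applying it again should work just as well.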

$ kubectl get pdb
NAME        MIN AVAILABLE   MAX UNAVAILABLE   ALLOWED DISRUPTIONS   AGE
nginx-pdb   2               N/A               0                     5m22s

$ kubectl get pod,deploy
NAME                                READY   STATUS    RESTARTS   AGE
pod/nginx-preemptor                 1/1     Running   0          6m25s
pod/nginx-victim-588f6db4bd-p49vg   0/1     Pending   0          3m19s
pod/nginx-victim-588f6db4bd-r99bq   1/1     Running   0          7m40s
pod/nginx-victim-588f6db4bd-xdsht   1/1     Running   0          7m40s

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-victim   2/3     3            2           7m40s

The output shows that one replica of the low-priority Deployment was preempted; its replacement (nginx-victim-588f6db4bd-p49vg) is Pending.

- Clean up the environment

kubectl delete pod nginx-preemptor && kubectl delete deploy nginx-victim && kubectl delete pdb nginx-pdb

Gödel-specific Preemption


Beyond the basic preemption behavior shown above, the Gödel Scheduling System also honors additional protection mechanisms.

Preemptibility Annotation


Gödel Scheduling System introduces a custom annotation, "godel.bytedance.com/can-be-preempted": "true", to mark Pods as preemptible. The annotation is honored whether it is specified on the Pod object or on the PriorityClass object.

Only Pods with preemptibility enabled can be preempted. A Pod without it will never be preempted, regardless of priority.
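Because the annotation may live on either object, it can help to check both before expecting a Pod to be preempted. The following is a minimal sketch that reads the annotation with kubectl's JSONPath output, where dots inside a key have to be escaped. The helper names `jsonpath_key` and `preemptibility_of` are hypothetical, and the sketch only reads what is stored on the objects; it does not reproduce how Gödel evaluates them.

```shell
# Annotation key introduced in this guide.
PREEMPT_KEY='godel.bytedance.com/can-be-preempted'

# Hypothetical helper: escape dots so the key can be used in a kubectl JSONPath expression.
jsonpath_key() {
  printf '%s' "$1" | sed 's/\./\\./g'
}

# Hypothetical helper: print the annotation value on an object, or nothing if it is unset.
# $1 = resource kind (pod or priorityclass), $2 = object name.
preemptibility_of() {
  kubectl get "$1" "$2" -o "jsonpath={.metadata.annotations.$(jsonpath_key "$PREEMPT_KEY")}"
}
```

For example, `preemptibility_of pod nginx-victim` and `preemptibility_of priorityclass low-priority` would cover both places the annotation can be set.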

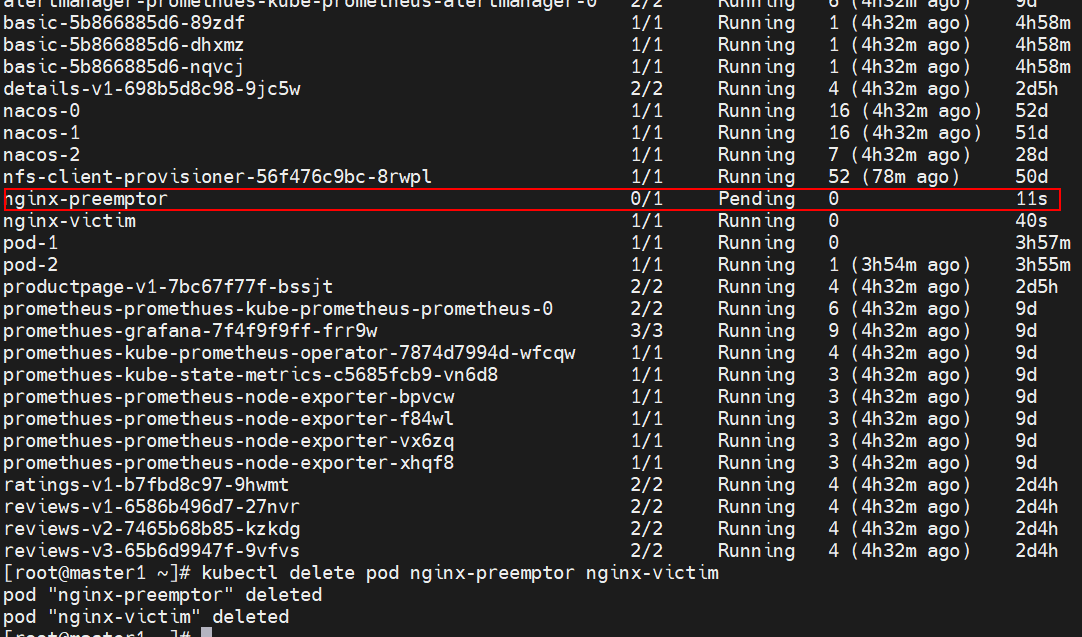

- Create a Pod without preemptibility enabled.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: low-priority
value: 80
description: "a priority class with low priority"
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-victim
spec:
  schedulerName: godel-scheduler
  priorityClassName: low-priority
  containers:
    - name: nginx-victim
      image: nginx
      imagePullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 6
        requests:
          cpu: 6

$ kubectl get pod
NAME           READY   STATUS    RESTARTS   AGE
nginx-victim   1/1     Running   0          2s

- Create a Pod with a higher priority.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 100
description: "a priority class with a high priority"
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-preemptor
spec:
  schedulerName: godel-scheduler
  priorityClassName: high-priority
  containers:
    - name: nginx-preemptor
      image: nginx
      imagePullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 3
        requests:
          cpu: 3

$ kubectl get pod
NAME              READY   STATUS    RESTARTS   AGE
nginx-preemptor   0/1     Pending   0          11s
nginx-victim      1/1     Running   0          2m32s

In this case, preemption does not happen because preemptibility is not enabled for the low-priority Pod.

- Clean up the environment

kubectl delete pod nginx-preemptor nginx-victim

Protection Duration


Gödel Scheduling System also supports protecting a preemptible Pod from preemption for at least a specified amount of time after it starts. The Pod annotation godel.bytedance.com/protection-duration-from-preemption specifies the protection duration in seconds.
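To estimate how much of the protection window is left for a Pod, the duration can be compared with the time elapsed since the Pod started. The sketch below is illustrative only: it assumes the window is counted from the Pod's status.startTime (the guide does not say which timestamp Gödel uses) and that GNU date is available for parsing timestamps. Both helper names are hypothetical.

```shell
# Hypothetical helper: seconds of protection left, never below zero.
# $1 = start time (epoch seconds), $2 = protection duration (seconds),
# $3 = current time (epoch seconds).
protection_remaining() (
  left=$(( $1 + $2 - $3 ))
  if [ "$left" -lt 0 ]; then
    left=0
  fi
  echo "$left"
)

# Hypothetical helper: look the values up on a live Pod.
# Assumes GNU date (-d) and that status.startTime is the reference point.
pod_protection_remaining() (
  start=$(kubectl get pod "$1" -o jsonpath='{.status.startTime}')
  duration=$(kubectl get pod "$1" -o jsonpath='{.metadata.annotations.godel\.bytedance\.com/protection-duration-from-preemption}')
  protection_remaining "$(date -d "$start" +%s)" "${duration:-0}" "$(date +%s)"
)
```

For example, `pod_protection_remaining nginx-victim` prints the estimated number of seconds before the Pod becomes eligible for preemption, or 0 once the window has passed.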

- Create a Pod with a 30-second protection duration.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: low-priority
  annotations:
    "godel.bytedance.com/can-be-preempted": "true"
value: 80
description: "a priority class with low priority that can be preempted"
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-victim
  annotations:
    "godel.bytedance.com/protection-duration-from-preemption": "30"
spec:
  schedulerName: godel-scheduler
  priorityClassName: low-priority
  containers:
    - name: nginx-victim
      image: nginx
      imagePullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 6
        requests:
          cpu: 6

$ kubectl get pod
NAME           READY   STATUS    RESTARTS   AGE
nginx-victim   1/1     Running   0          3s

- Create a Pod with a higher priority.

---
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 100
description: "a priority class with a high priority"
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-preemptor
spec:
  schedulerName: godel-scheduler
  priorityClassName: high-priority
  containers:
    - name: nginx-preemptor
      image: nginx
      imagePullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 3
        requests:
          cpu: 3

Within the protection duration, preemption will not be triggered.

$ kubectl get pod
NAME              READY   STATUS    RESTARTS   AGE
nginx-preemptor   0/1     Pending   0          14s
nginx-victim      1/1     Running   0          26s

Once the protection duration has elapsed, preemption eventually takes place.

$ kubectl get pod
NAME              READY   STATUS    RESTARTS   AGE
nginx-preemptor   1/1     Running   0          78s

- Clean up the environment

kubectl delete pod nginx-preemptor

Wrap-up


This quickstart guide covered selected preemption features of the Gödel Scheduling System. More advanced preemption features and strategies, along with the corresponding technical deep dives, can be expected.