Back up the etcd data

1. Expand from a single master with a single etcd to 3 master and 3 etcd nodes

2. Certificates involved

Certificates on the master nodes

- apiserver.crt

- apiserver.csr

- apiserver.key

- client.crt

- client.key

- etcd_client.crt

- etcd_client.key

- front-proxy_bak-ca.crt

- front-proxy_bak-ca.key

- front-proxy_bak-ca.srl

- front-proxy_bak-client.crt

- front-proxy_bak-client.key

- front_proxy_ssl.cnf

- sa.key

- sa.pub

- etcd_server.crt

- etcd_server.key

Certificates on the worker nodes

- ca.crt

- client.key

- client.crt

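These certificates must be regenerated; ca.crt and ca.key do not need to be regenerated. Prepare the new certificates in advance and back up the old ones.

3. Using the CA certificate together with master_ssl.cnf and etcd_ssl.cnf, generate the new master and etcd certificates; using the CA certificate and master_ssl.cnf, generate client.crt for the worker nodes. Have all of these ready in advance. master_ssl.cnf contains the IPs of the 3 master nodes and the hardware load balancer VIP; etcd_ssl.cnf contains the IPs of the 3 etcd nodes.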
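The real layout of master_ssl.cnf and etcd_ssl.cnf is not shown in these notes. As a minimal sketch, assuming the files use a standard OpenSSL `[alt_names]` section, the IP entries could be generated like this (`san_section` is a hypothetical helper name):

```shell
#!/bin/sh
# Sketch only: print an OpenSSL [alt_names] section, one IP.<n> entry per argument.
# The actual master_ssl.cnf / etcd_ssl.cnf layout is an assumption.
san_section() {
  i=1
  echo "[alt_names]"
  for ip in "$@"; do
    echo "IP.${i} = ${ip}"
    i=$((i + 1))
  done
}

# master_ssl.cnf: the 3 master IPs plus the load balancer VIP
san_section 192.168.0.61 192.168.0.62 192.168.0.63 192.168.0.70
# etcd_ssl.cnf: the 3 etcd IPs
san_section 192.168.0.61 192.168.0.62 192.168.0.63
```

If an IP is missing from the SAN list, TLS clients connecting through that address will reject the certificate, so it is worth checking the list before regenerating anything.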

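4. Copy the newly generated etcd certificates to the etcd nodes, replace the old certificates, and restart the etcd nodes.

5. Copy the newly generated master certificates to the master nodes, replace the old master certificates, and restart the master services: kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, kube-proxy.

6. Copy the newly generated worker certificates to the worker nodes, replace the existing certificates, and restart kubelet and kube-proxy on the worker nodes.

7. Verify that the services are healthy: kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, kube-proxy, and etcd on the master nodes, and kubelet and kube-proxy on the worker nodes.

Rollback: remove the 2 newly added master nodes, restore the old certificates from the backup, and restart the related services on the master and worker nodes.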
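The certificate part of the rollback could be scripted roughly as follows. This is a sketch under assumptions: `restore_certs` is a hypothetical helper, and both directories are passed in as arguments rather than taken from the playbook.

```shell
#!/bin/sh
# Sketch only: restore a certificate directory from a backup copy.
# Both paths are arguments; the helper name is hypothetical.
restore_certs() {
  backup_dir=$1
  live_dir=$2
  [ -d "$backup_dir" ] || { echo "backup not found: $backup_dir" >&2; return 1; }
  mkdir -p "$live_dir"
  # Remove the new certificates, then copy the old ones back, preserving permissions.
  rm -rf "${live_dir:?}"/*
  cp -rp "$backup_dir"/. "$live_dir"/
}

# Example, using the backup path from later in these notes:
# restore_certs /etc/kubernetes/ssl_bak0514 /etc/kubernetes/ssl
```

The services still need to be restarted afterwards, as described in the rollback step.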

Simulation: expanding a single master with a single etcd to 3 masters and 3 etcd nodes

Current state:

master1 192.168.0.61

node1 192.168.0.64

After the change:

master1:192.168.0.61

master2:192.168.0.62

master3:192.168.0.63

node1: 192.168.0.64

lb1: 192.168.0.71

lb2: 192.168.0.72

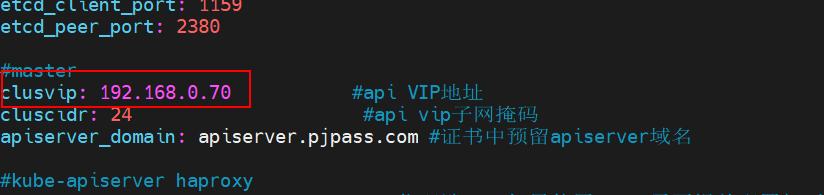

vip: 192.168.0.70

1、备份etcd数据

2、停止所有节点的kube-apiserver,kube-scheduler,kube-controller-manager,etcd,kubelet,kube-proxy服务

3、修改all.yml里的master节点的vip为真实的vip,hosts文件增加master组和etcd组,node组 2个新增的master节点的ip

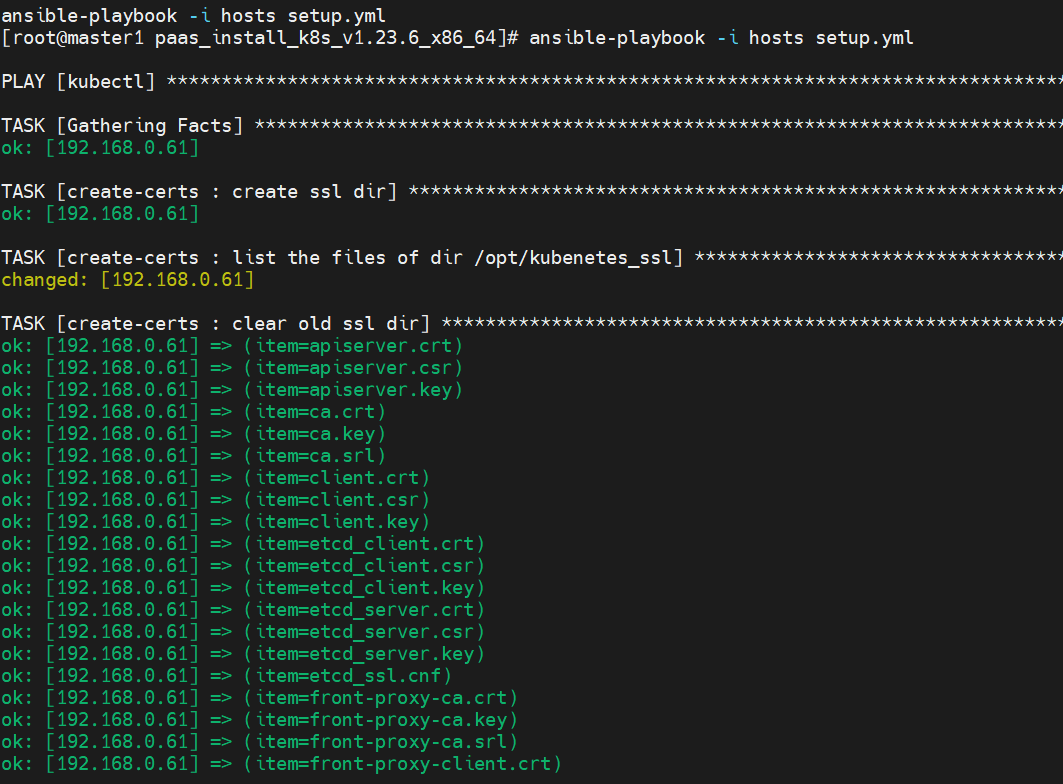

4、备份老的证书文件夹下的文件,执行ansible-playbook -i hosts setup.yml,只包含创建证书这一步

在/opt/kubernetes_ssl下重新生成apiserver.crt

apiserver.csr

apiserver.key

ca.crt

ca.key

ca.srl

client.crt

client.csr

client.key

etcd_client.crt

etcd_client.csr

etcd_client.key

etcd_server.crt

etcd_server.csr

etcd_server.key

etcd_ssl.cnf

front-proxy-ca.crt

front-proxy-ca.key

front-proxy-ca.srl

front-proxy-client.crt

front-proxy-client.csr

front-proxy-client.key

front_proxy_ssl.cnf

master_ssl.cnf

sa.key

sa.pub

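Certificates

The single-master, single-etcd cluster already has pods, PVCs, and StorageClasses; this data must still exist after the expansion.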

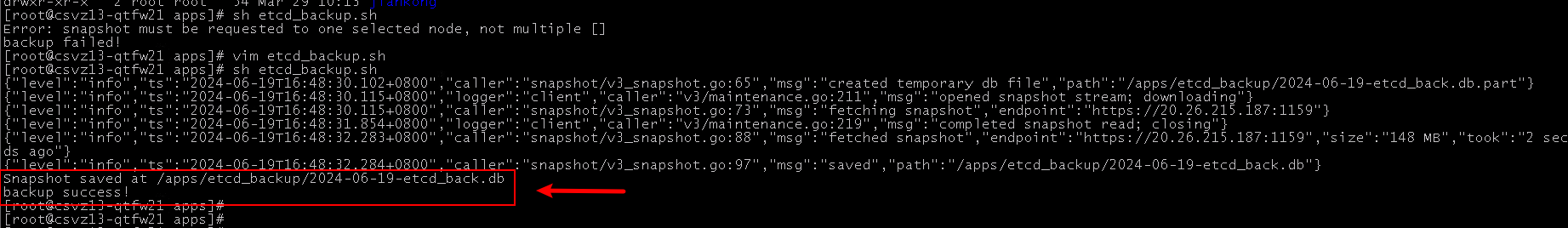

Back up the etcd data

[root@master1 apps]# cat etcd_backup.sh

#!/bin/bash

# etcd endpoint addresses and ports; adjust for your environment

#etcd_url_1="https://10.253.227.180:1183"

#etcd_url_2="https://10.253.227.181:1183"

#etcd_url_3="https://10.253.227.182:1183"

# etcd backup data path; adjust for your environment

bakdata_basedir="/apps/etcd_backup/"

# With HTTPS enabled on etcd, the CA and etcd certificates are required; adjust for your environment

cacert_file="/etc/kubernetes/ssl/ca.crt"

etcd_cert_file="/etc/kubernetes/ssl/etcd_server.crt"

etcd_key_file="/etc/kubernetes/ssl/etcd_server.key"

# Get the etcd leader address (column 6 of the table is IS LEADER; strip the padding spaces from the endpoint)

leader_url=$(ETCDCTL_API=3 sudo etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=$cacert_file --cert=$etcd_cert_file --key=$etcd_key_file endpoint status -w table | awk -F'|' '$6~"true"{gsub(/ /,"",$2); print $2}')

##echo "leader address: "$leader_url

# Create the backup data directory

if [ ! -d "$bakdata_basedir" ]; then

mkdir -p "$bakdata_basedir"

fi

# Start the backup

ETCDCTL_API=3 sudo etcdctl --endpoints="$leader_url" --cacert=$cacert_file --cert=$etcd_cert_file --key=$etcd_key_file snapshot save $bakdata_basedir`date +%Y-%m-%d`-etcd_back.db

if [ "$?" = "0" ]; then

echo "backup success!"

else

echo "backup failed!"

fi

# Delete backups older than 60 days

find /apps/etcd_backup -type f -mtime +60 -exec rm -f {} \;

Run the etcd backup

[root@master1 apps]# sh etcd_backup.sh

{"level":"info","ts":"2024-05-11T16:20:13.600+0800","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/apps/etcd_backup/2024-05-11-etcd_back.db.part"}

{"level":"info","ts":"2024-05-11T16:20:13.606+0800","logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":"2024-05-11T16:20:13.606+0800","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"https://192.168.0.61:1159"}

{"level":"info","ts":"2024-05-11T16:20:13.705+0800","logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":"2024-05-11T16:20:13.711+0800","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"https://192.168.0.61:1159","size":"6.1 MB","took":"now"}

{"level":"info","ts":"2024-05-11T16:20:13.711+0800","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/apps/etcd_backup/2024-05-11-etcd_back.db"}

Snapshot saved at /apps/etcd_backup/2024-05-11-etcd_back.db

backup success!

[root@master1 apps]# ls -la /apps/etcd_backup/

total 5932

drwxr-xr-x. 2 root root 37 May 11 16:20 .

drwxr-xr-x. 7 root root 174 May 11 09:35 ..

-rw------- 1 root root 6070304 May 11 16:20 2024-05-11-etcd_back.db

[root@master1 apps]#
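Before stopping any services it may be worth confirming that the snapshot file is actually usable. A minimal sketch, assuming a non-empty file is a sufficient first check (`snapshot_ok` is a hypothetical helper):

```shell
#!/bin/sh
# Sketch only: succeed if the snapshot file exists and is not empty.
snapshot_ok() {
  [ -s "$1" ]
}

# Example, using the path from the transcript above:
if snapshot_ok /apps/etcd_backup/2024-05-11-etcd_back.db; then
  echo "snapshot file present"
else
  echo "snapshot file missing or empty"
fi
# For a deeper check, etcdctl can print the hash, revision, and key count:
#   ETCDCTL_API=3 etcdctl snapshot status /apps/etcd_backup/2024-05-11-etcd_back.db -w table
```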

Back up the old certificate files

cp -rp /etc/kubernetes/ssl /etc/kubernetes/ssl_bak0514

Stop the Kubernetes services on the master and worker nodes

ansible -i hosts node -m shell -a "systemctl stop kubelet"

ansible -i hosts node -m shell -a "systemctl stop kube-proxy"

ansible -i hosts master -m shell -a "systemctl stop etcd"

ansible -i hosts master -m shell -a "systemctl stop kube-apiserver"

ansible -i hosts master -m shell -a "systemctl stop kube-controller-manager"

ansible -i hosts master -m shell -a "systemctl stop kube-scheduler"

ansible -i hosts master -m shell -a "systemctl stop kubelet"

ansible -i hosts master -m shell -a "systemctl stop kube-proxy"
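The stop sequence above follows one pattern per group and service, so it can be generated in a loop. The sketch below only prints the commands (a dry run) so they can be reviewed first; `stop_cmds` is a hypothetical helper and the service order is the one used above.

```shell
#!/bin/sh
# Sketch only: print (do not run) the ansible stop commands for one inventory group.
stop_cmds() {
  group=$1
  shift
  for svc in "$@"; do
    echo "ansible -i hosts ${group} -m shell -a \"systemctl stop ${svc}\""
  done
}

stop_cmds node kubelet kube-proxy
stop_cmds master etcd kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy
```

Piping the output to `sh` would execute it, once the list has been checked against the inventory.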

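Update setup.yml, hosts, and group_var as follows:

in setup.yml, enable only the certificate-creation step;

in hosts, add the two new nodes to the master group, the node group, and the docker group;

under group_var, change vip to the newly allocated VIP address.

Regenerate the certificates, including the etcd-related certificates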

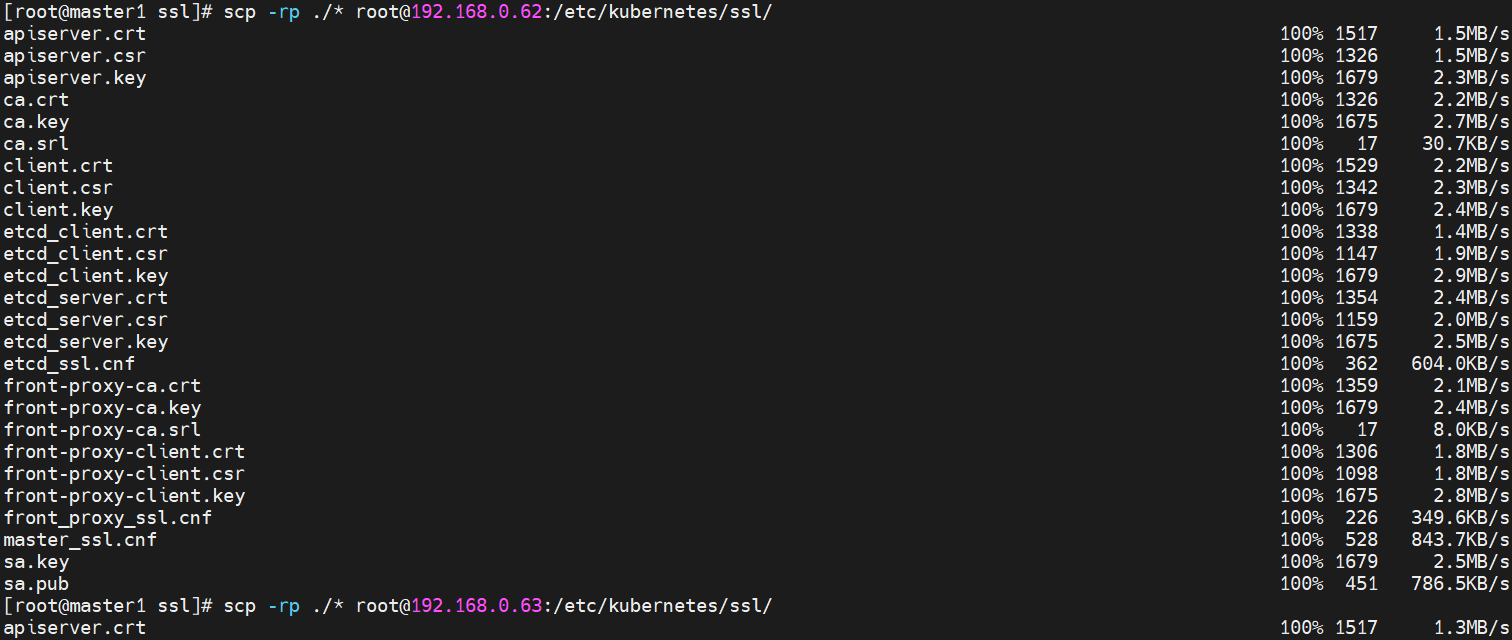

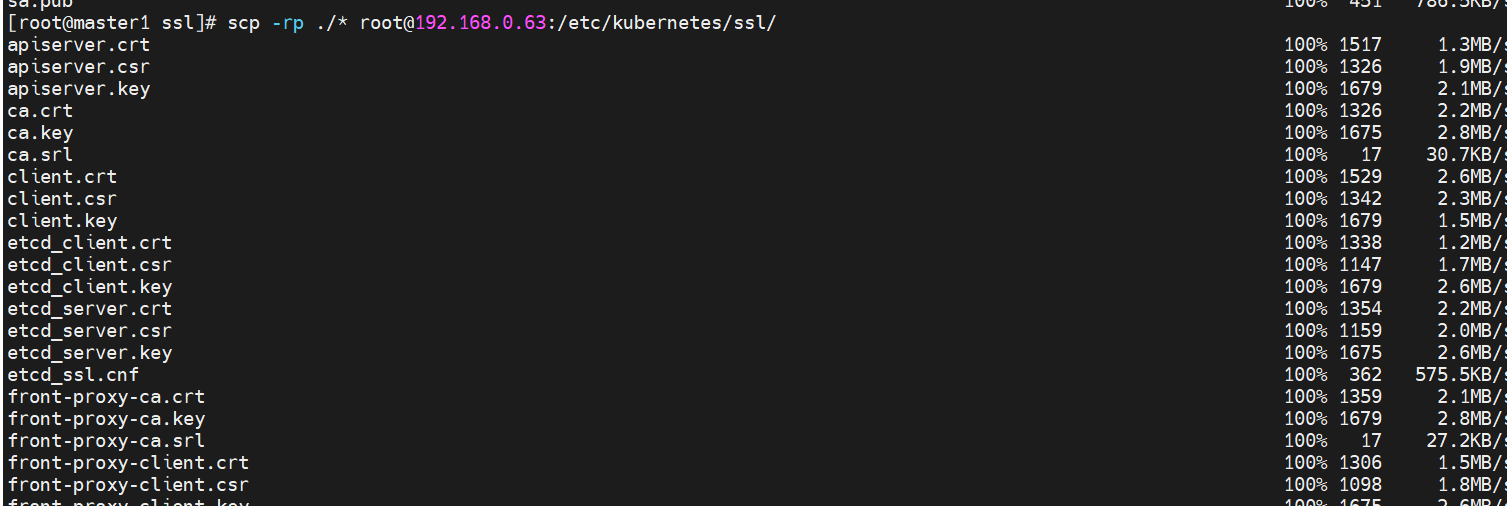

Copy the certificates under /opt/kubernetes_ssl/ to /etc/kubernetes/ssl/ on both the old and the new master nodes

Back up the old certificate files, then delete them

cp -rp /etc/kubernetes/ssl/ /etc/kubernetes/ssl_bak/

rm -rf /etc/kubernetes/ssl/*

# Copy the certificates under /opt/kubernetes_ssl/ to /etc/kubernetes/ssl/

# cp -rp /opt/kubernetes_ssl/etcd* /etc/kubernetes/ssl/

# cp -rp /opt/kubernetes_ssl/ca.crt /etc/kubernetes/ssl/

# cp -rp /opt/kubernetes_ssl/ca.key /etc/kubernetes/ssl/

These include:

- ca.crt

- ca.key

- etcd_server.crt

- etcd_server.key

- etcd_client.crt

- etcd_client.key

Copy the etcd binaries, configuration, and certificates to master2 and master3

scp -rp /usr/bin/etcd root@192.168.0.62:/usr/bin/etcd

scp -rp /usr/bin/etcdctl root@192.168.0.62:/usr/bin/etcdctl

scp -rp /etc/etcd/etcd.conf root@192.168.0.62:/etc/etcd/etcd.conf   # edit this config on each node as it is added

scp -rp /usr/lib/systemd/system/etcd.service root@192.168.0.62:/usr/lib/systemd/system/etcd.service

scp -rp /etc/kubernetes/ssl/ca.crt root@192.168.0.62:/etc/kubernetes/ssl/ca.crt

scp -rp /etc/kubernetes/ssl/ca.key root@192.168.0.62:/etc/kubernetes/ssl/ca.key

scp -rp /etc/kubernetes/ssl/etcd_server.crt root@192.168.0.62:/etc/kubernetes/ssl/etcd_server.crt

scp -rp /etc/kubernetes/ssl/etcd_server.key root@192.168.0.62:/etc/kubernetes/ssl/etcd_server.key

scp -rp /etc/kubernetes/ssl/etcd_client.crt root@192.168.0.62:/etc/kubernetes/ssl/etcd_client.crt

scp -rp /etc/kubernetes/ssl/etcd_client.key root@192.168.0.62:/etc/kubernetes/ssl/etcd_client.key

systemctl daemon-reload

On master2 and master3, /data/etcd_data/etcd must be empty; if it is not, delete the files under **/data/etcd_data/etcd** on master2 and master3 before starting etcd.
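The empty-directory requirement can be checked on each new node before etcd is started. A minimal sketch (`dir_is_empty` is a hypothetical helper; a missing directory is treated as empty):

```shell
#!/bin/sh
# Sketch only: succeed if the directory does not exist or has no entries.
dir_is_empty() {
  [ ! -d "$1" ] || [ -z "$(ls -A "$1")" ]
}

if dir_is_empty /data/etcd_data/etcd; then
  echo "data dir is clean"
else
  echo "data dir has files; clear it before starting etcd" >&2
fi
```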

Restart the etcd service on the old node (61)

systemctl restart etcd

systemctl start etcd

Add etcd on the new node (62)

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159 \

--cacert=/etc/kubernetes/ssl/ca.crt \

--cert=/etc/kubernetes/ssl/etcd_server.crt \

--key=/etc/kubernetes/ssl/etcd_server.key \

member add etcd_0_62 --peer-urls=https://192.168.0.62:2380

[root@master1 ssl]# ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159 \

> --cacert=/etc/kubernetes/ssl/ca.crt \

> --cert=/etc/kubernetes/ssl/etcd_server.crt \

> --key=/etc/kubernetes/ssl/etcd_server.key \

> member add etcd_0_62 --peer-urls=https://192.168.0.62:2380

Member bcc22e0a75396cbc added to cluster f6f9624e4f919ae5

ETCD_NAME="etcd_0_62"

ETCD_INITIAL_CLUSTER="etcd_0_61=https://192.168.0.61:2380,etcd_0_62=https://192.168.0.62:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.0.62:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

[root@master1 ssl]#

Update the etcd configuration on 61 and 62: ETCD_INITIAL_CLUSTER must list both 61 and 62, and ETCD_INITIAL_CLUSTER_STATE must be set to existing on both. After the change, restart etcd on both nodes.

systemctl restart etcd
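The ETCD_INITIAL_CLUSTER value has to be identical on every member, so generating it once and pasting it into each etcd.conf avoids typos. A minimal sketch, assuming the etcd_0_<last octet> naming and the 192.168.0.x subnet used in this walkthrough (`initial_cluster` is a hypothetical helper):

```shell
#!/bin/sh
# Sketch only: build an ETCD_INITIAL_CLUSTER value from the last octet of each member IP.
# Assumes the etcd_0_<octet> naming and the 192.168.0.x subnet used in these notes.
initial_cluster() {
  out=""
  for octet in "$@"; do
    entry="etcd_0_${octet}=https://192.168.0.${octet}:2380"
    out="${out:+${out},}${entry}"
  done
  echo "$out"
}

echo "ETCD_INITIAL_CLUSTER=\"$(initial_cluster 61 62)\""
```

For the third member the same helper would be called with `61 62 63`.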

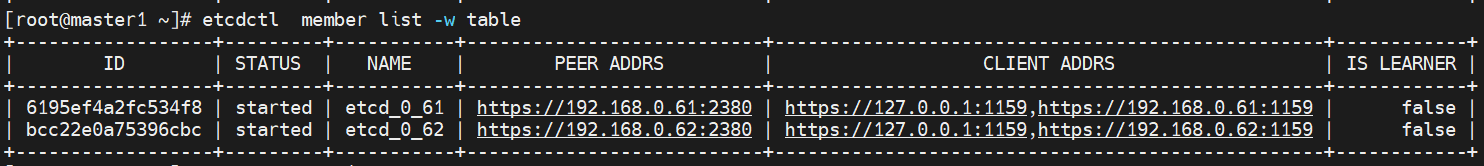

View the member list

[root@master1 ~]# etcdctl member list -w table

+------------------+---------+-----------+---------------------------+--------------------------------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+-----------+---------------------------+--------------------------------------------------+------------+

| 6195ef4a2fc534f8 | started | etcd_0_61 | https://192.168.0.61:2380 | https://127.0.0.1:1159,https://192.168.0.61:1159 | false |

| bcc22e0a75396cbc | started | etcd_0_62 | https://192.168.0.62:2380 | https://127.0.0.1:1159,https://192.168.0.62:1159 | false |

+------------------+---------+-----------+---------------------------+--------------------------------------------------+------------+

ETCDCTL_API=3 etcdctl --endpoints=http://192.168.0.61:2382,http://192.168.0.62:2382,http://192.168.0.63:2382 member list -w table

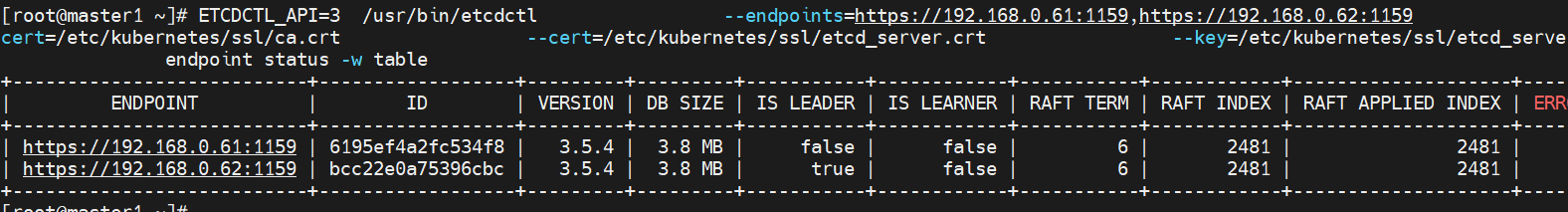

Check the status; when healthy, both members report a DB size of 3.8 MB

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint status -w table

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://2:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint status -w table

ETCDCTL_API=3 etcdctl --endpoints=http://192.168.0.61:2382,http://192.168.0.62:2382,http://192.168.0.63:2382 endpoint status -w table

[root@master1 ~]# ETCDCTL_API=3 /usr/bin/etcdctl \

> --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159 \

> --cacert=/etc/kubernetes/ssl/ca.crt \

> --cert=/etc/kubernetes/ssl/etcd_server.crt \

> --key=/etc/kubernetes/ssl/etcd_server.key \

> endpoint status -w table

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.0.61:1159 | 6195ef4a2fc534f8 | 3.5.4 | 3.8 MB | false | false | 6 | 2481 | 2481 | |

| https://192.168.0.62:1159 | bcc22e0a75396cbc | 3.5.4 | 3.8 MB | true | false | 6 | 2481 | 2481 | |

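+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+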

On node 61, add the third etcd member

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159 \

--cacert=/etc/kubernetes/ssl/ca.crt \

--cert=/etc/kubernetes/ssl/etcd_server.crt \

--key=/etc/kubernetes/ssl/etcd_server.key \

member add etcd_0_63 --peer-urls=https://192.168.0.63:2380

[root@master1 ~]# ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159 \

> --cacert=/etc/kubernetes/ssl/ca.crt \

> --cert=/etc/kubernetes/ssl/etcd_server.crt \

> --key=/etc/kubernetes/ssl/etcd_server.key \

> member add etcd_0_63 --peer-urls=https://192.168.0.63:2380

Member 3dcc0344ff804247 added to cluster f6f9624e4f919ae5

ETCD_NAME="etcd_0_63"

ETCD_INITIAL_CLUSTER="etcd_0_63=https://192.168.0.63:2380,etcd_0_61=https://192.168.0.61:2380,etcd_0_62=https://192.168.0.62:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.0.63:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

Update the etcd configuration on 61, 62, and 63: ETCD_INITIAL_CLUSTER must list 61, 62, and 63, and ETCD_INITIAL_CLUSTER_STATE must be set to existing on all three. After the change, restart etcd on all 3 nodes.

cd /etc/etcd/

vim etcd.conf

systemctl restart etcd

View the member list

ETCDCTL_API=3 etcdctl --endpoints=http://192.168.0.61:2382,http://192.168.0.62:2382,http://192.168.0.63:2382 member list -w table

[root@master1 etcd]# ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key member list -w table

+------------------+---------+-----------+---------------------------+--------------------------------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+-----------+---------------------------+--------------------------------------------------+------------+

| 3dcc0344ff804247 | started | etcd_0_63 | https://192.168.0.63:2380 | https://127.0.0.1:1159,https://192.168.0.63:1159 | false |

| 6195ef4a2fc534f8 | started | etcd_0_61 | https://192.168.0.61:2380 | https://127.0.0.1:1159,https://192.168.0.61:1159 | false |

| bcc22e0a75396cbc | started | etcd_0_62 | https://192.168.0.62:2380 | https://127.0.0.1:1159,https://192.168.0.62:1159 | false |

+------------------+---------+-----------+---------------------------+--------------------------------------------------+------------+

[root@master1 etcd]#

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.186:1159,https://192.168.0.187:1159,https://192.168.0.188:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key member list -w table

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key member list -w table

Check etcd health

ETCDCTL_API=3 etcdctl --endpoints=http://192.168.0.61:2382,http://192.168.0.62:2382,http://192.168.0.63:2382 endpoint health -w table

[root@master1 etcd]# ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint health -w table

+---------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+---------------------------+--------+-------------+-------+

| https://192.168.0.62:1159 | true | 12.985932ms | |

| https://192.168.0.61:1159 | true | 12.078238ms | |

| https://192.168.0.63:1159 | true | 14.896674ms | |

+---------------------------+--------+-------------+-------+
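The health table can also be checked by a script rather than by eye. The sketch below reads the table on stdin and fails if any endpoint row is not `true`; `all_healthy` is a hypothetical helper, and the sample rows are copied from the output above.

```shell
#!/bin/sh
# Sketch only: read an "endpoint health -w table" listing on stdin and
# fail if there are no endpoint rows or any row has a HEALTH value other than true.
all_healthy() {
  awk -F'|' '
    $2 ~ /https?:\/\// { rows++; if ($3 !~ /true/) bad++ }
    END { exit ((rows > 0 && bad == 0) ? 0 : 1) }
  '
}

all_healthy <<'EOF' && echo "all endpoints healthy"
| ENDPOINT | HEALTH | TOOK | ERROR |
| https://192.168.0.62:1159 | true | 12.985932ms | |
| https://192.168.0.61:1159 | true | 12.078238ms | |
| https://192.168.0.63:1159 | true | 14.896674ms | |
EOF
```

In practice the real `etcdctl ... endpoint health -w table` command would be piped into the function instead of the here-document.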

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.186:1159,https://192.168.0.187:1159,https://192.168.0.188:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint health -w table

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint health -w table

Check the etcd status: the DB size is approximately the same on all 3 nodes and the status is healthy

ETCDCTL_API=3 etcdctl --endpoints=http://192.168.0.61:2382,http://192.168.0.62:2382,http://192.168.0.63:2382 endpoint status -w table

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://20.26.215.183:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint status -w table

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.186:1159,https://192.168.0.187:1159,https://192.168.0.188:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint status -w table

ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint status -w table

[root@master1 etcd]# ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://192.168.0.61:1159,https://192.168.0.62:1159,https://192.168.0.63:1159 --cacert=/etc/kubernetes/ssl/ca.crt --cert=/etc/kubernetes/ssl/etcd_server.crt --key=/etc/kubernetes/ssl/etcd_server.key endpoint status -w table

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.0.61:1159 | 6195ef4a2fc534f8 | 3.5.4 | 3.8 MB | false | false | 8 | 2487 | 2487 | |

| https://192.168.0.62:1159 | bcc22e0a75396cbc | 3.5.4 | 3.8 MB | true | false | 8 | 2487 | 2487 | |

| https://192.168.0.63:1159 | 3dcc0344ff804247 | 3.5.4 | 3.9 MB | false | false | 8 | 2487 | 2487 | |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
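The backup script earlier picks the leader out of this same table with awk. As a standalone sketch of that step (`leader_of` is a hypothetical helper; column 6 is IS LEADER when the row is split on `|`, and the sample rows are copied from the output above):

```shell
#!/bin/sh
# Sketch only: read an "endpoint status -w table" listing on stdin and
# print the endpoint whose IS LEADER column is true, with padding removed.
leader_of() {
  awk -F'|' '$6 ~ /true/ { gsub(/ /, "", $2); print $2 }'
}

leader_of <<'EOF'
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
| https://192.168.0.61:1159 | 6195ef4a2fc534f8 | 3.5.4 | 3.8 MB | false | false | 8 | 2487 | 2487 | |
| https://192.168.0.62:1159 | bcc22e0a75396cbc | 3.5.4 | 3.8 MB | true | false | 8 | 2487 | 2487 | |
| https://192.168.0.63:1159 | 3dcc0344ff804247 | 3.5.4 | 3.9 MB | false | false | 8 | 2487 | 2487 | |
EOF
```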

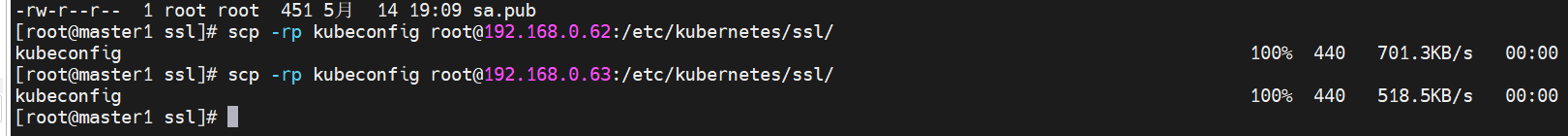

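Copy the certificate-related files to each host (master and worker nodes)

Copy the related files to the new master nodes (master2 and master3)

The commands below use 192.168.0.62; repeat them for the other new master as well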

scp -rp /usr/bin/kube-apiserver root@192.168.0.62:/usr/bin/kube-apiserver

scp -rp /usr/bin/kube-controller-manager root@192.168.0.62:/usr/bin/kube-controller-manager

scp -rp /usr/bin/kube-scheduler root@192.168.0.62:/usr/bin/kube-scheduler

scp -rp /usr/bin/kubectl root@192.168.0.62:/usr/bin/kubectl

scp -rp /etc/kubernetes/ssl root@192.168.0.62:/etc/kubernetes/ssl

scp -rp /etc/kubernetes/ssl/* root@192.168.0.62:/etc/kubernetes/ssl/

mkdir -p /var/log/kubernetes

scp -rp /etc/kubernetes/apiserver root@192.168.0.62:/etc/kubernetes/apiserver

scp -rp /etc/kubernetes/controller-manager root@192.168.0.62:/etc/kubernetes/controller-manager

scp -rp /etc/kubernetes/scheduler root@192.168.0.62:/etc/kubernetes/scheduler

scp -rp /etc/kubernetes/kubeconfig_bak root@192.168.0.62:/etc/kubernetes/kubeconfig_bak

scp -rp /usr/lib/systemd/system/kube-apiserver.service root@192.168.0.62:/usr/lib/systemd/system/kube-apiserver.service

scp -rp /usr/lib/systemd/system/kube-controller-manager.service root@192.168.0.62:/usr/lib/systemd/system/kube-controller-manager.service

scp -rp /usr/lib/systemd/system/kube-scheduler.service root@192.168.0.62:/usr/lib/systemd/system/kube-scheduler.service

mkdir -p /etc/kubernetes/pki/ca_ssl

scp -rp /etc/kubernetes/pki/ca_ssl root@192.168.0.62:/etc/kubernetes/pki/ca_ssl

systemctl daemon-reload

systemctl start kube-apiserver

systemctl start kube-controller-manager

systemctl start kube-scheduler

systemctl status kube-apiserver

systemctl status kube-controller-manager

systemctl status kube-scheduler

kubectl create clusterrolebinding root-cluster-admin-binding --clusterrole=cluster-admin --user=admin 2>/dev/null
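Since the same files go to both new masters, the scp lines can be generated per host instead of being edited by hand. The sketch below only prints the commands for review; `copy_cmds` is a hypothetical helper, and the list shown is just the binaries from the steps above.

```shell
#!/bin/sh
# Sketch only: print (do not run) one scp command per file for each target host.
copy_cmds() {
  hosts=$1
  shift
  for host in $hosts; do
    for f in "$@"; do
      echo "scp -rp ${f} root@${host}:${f}"
    done
  done
}

copy_cmds "192.168.0.62 192.168.0.63" \
  /usr/bin/kube-apiserver \
  /usr/bin/kube-controller-manager \
  /usr/bin/kube-scheduler \
  /usr/bin/kubectl
```

The config files and systemd units from the list above can be appended as further arguments.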

After this, simply run the master, node, calico, and coredns steps

After copying the master certificates to /etc/kubernetes/ssl, restart the services on the master and worker nodes

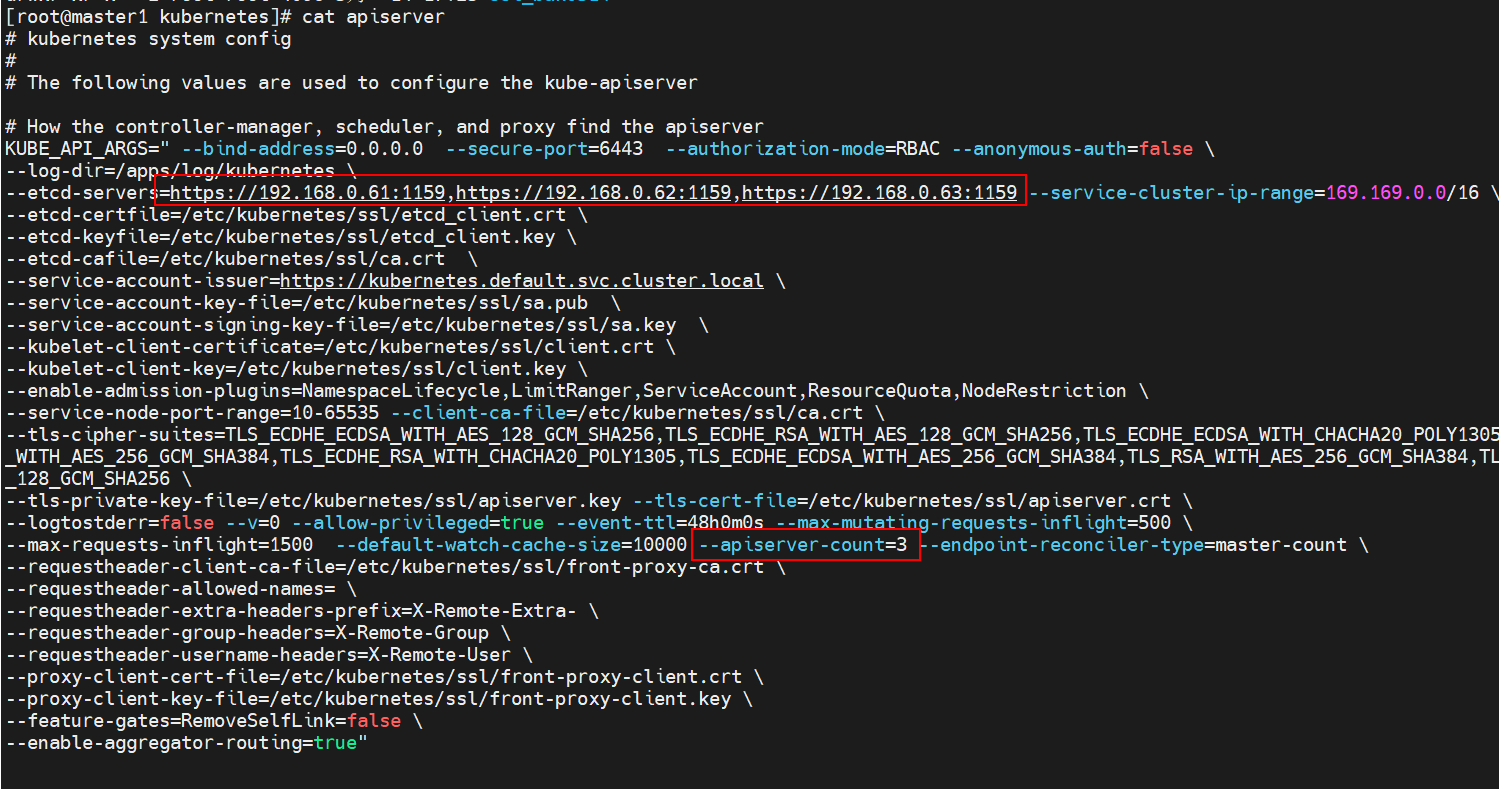

The apiserver configuration needs to be changed to the following

ansible -i hosts master -m shell -a "systemctl restart etcd"

ansible -i hosts master -m shell -a "systemctl restart kube-apiserver"

ansible -i hosts master -m shell -a "systemctl restart kube-controller-manager"

ansible -i hosts master -m shell -a "systemctl restart kube-scheduler"

ansible -i hosts master -m shell -a "systemctl restart kubelet"

ansible -i hosts master -m shell -a "systemctl restart kube-proxy"

ansible -i hosts node -m shell -a "systemctl restart kubelet"

ansible -i hosts node -m shell -a "systemctl restart kube-proxy"

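In theory calico is installed automatically; you can also rerun the calico and coredns installation steps. Note that UDP port 14789 must be open in both directions between the new nodes and the old nodes; otherwise the new master nodes have no network connectivity to the rest of the cluster.

Check the cluster's pods: healthy

k get pod -A

Check the cluster status: healthy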

k get cs

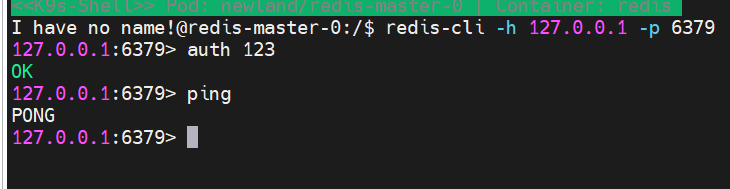

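All data is still present, including the redis service created on the single-node cluster, the redis PVC, and the nfs-client-provisioner service. This completes the expansion to 3 masters and 3 etcd nodes, with no loss of the original data.

Pod status is healthy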

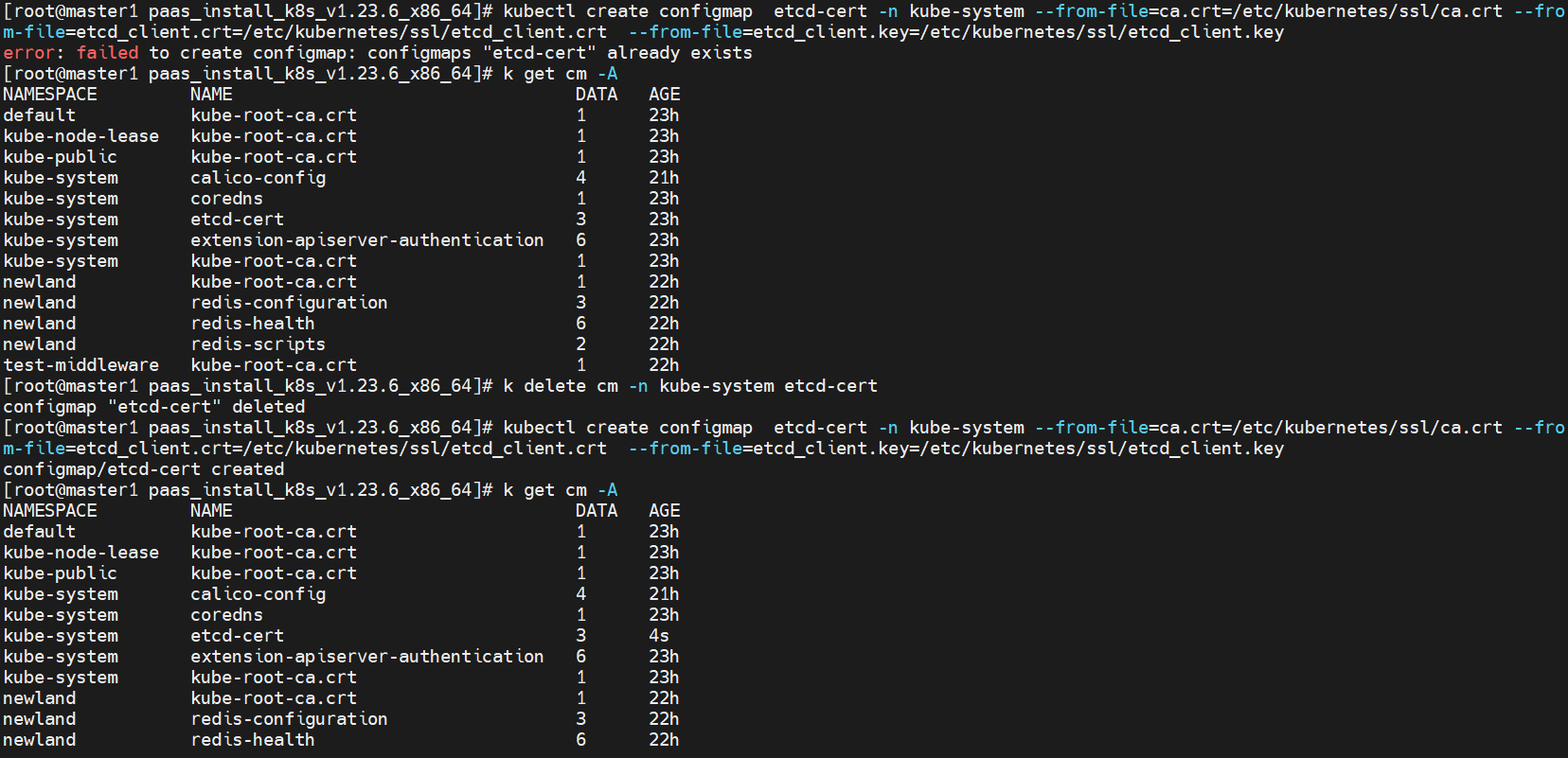

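Note: the coredns ConfigMap must be regenerated; otherwise etcd logs errors showing that connections from coredns are rejected because of a certificate error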

k delete cm -n kube-system etcd-cert

kubectl create configmap etcd-cert -n kube-system --from-file=ca.crt=/etc/kubernetes/ssl/ca.crt --from-file=etcd_client.crt=/etc/kubernetes/ssl/etcd_client.crt --from-file=etcd_client.key=/etc/kubernetes/ssl/etcd_client.key

kubectl apply -f /apps/cluster_modules_setup/coredns-1.7.1/coredns-v1.7.0-with-etcd.yaml

systemctl restart etcd

systemctl status etcd
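The delete-then-create step for the etcd-cert ConfigMap leaves a short window in which the ConfigMap does not exist. One alternative, sketched here on the assumption that the installed kubectl supports `--dry-run=client`, is to render the ConfigMap and apply it in place. `refresh_etcd_cert_cm` and the `KUBECTL` override variable are hypothetical names used only for this illustration.

```shell
#!/bin/sh
# Sketch only: re-render the etcd-cert ConfigMap and apply it without deleting it first.
# KUBECTL is a hypothetical override hook so the function can be exercised without a cluster.
refresh_etcd_cert_cm() {
  ssl_dir=${1:-/etc/kubernetes/ssl}
  ${KUBECTL:-kubectl} create configmap etcd-cert -n kube-system \
    --from-file=ca.crt="${ssl_dir}/ca.crt" \
    --from-file=etcd_client.crt="${ssl_dir}/etcd_client.crt" \
    --from-file=etcd_client.key="${ssl_dir}/etcd_client.key" \
    --dry-run=client -o yaml | ${KUBECTL:-kubectl} apply -f -
}

# Usage on a master node:
#   refresh_etcd_cert_cm
```

The coredns manifest still has to be re-applied afterwards, as in the steps above, so the pods pick up the new certificates.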

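1. The previous change already converted etcd to 3 nodes and copied the required system files to the 3 master nodes. This change updates the apiserver configuration and the certificates under /etc/kubernetes/ssl on the master nodes, and the certificates under /etc/kubernetes/ssl on the worker nodes.

2. Stop the cluster components on all worker and master nodes: kubelet, kube-proxy, kube-apiserver, etcd, kube-controller-manager, kube-scheduler.

3. Regenerate the ca, etcd, apiserver, and other certificates under /opt/kubernetes_ssl.

4. Copy the certificates from /opt/kubernetes_ssl to /etc/kubernetes/ssl on every master and worker node, replacing the existing certificates. Master nodes receive all files under /opt/kubernetes_ssl; worker nodes receive ca.crt, client.crt, client.key, and the kubeconfig file.

5. Start the cluster components again on all worker and master nodes: kubelet, kube-proxy, kube-apiserver, etcd, kube-controller-manager, kube-scheduler.

6. Check that the cluster state and the pod status are healthy.

Important: docker must be restarted; otherwise the cloud IDE cannot open its workspaces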